A common orchestration task is running a Python or R script as part of a pipeline. One way to do this is with Azure Data Factory (ADF) and Azure Batch.

The following example shows how to run a script using ADF and Azure Batch. Before starting, make sure you have a Batch account with a pool, and a storage account.

Say you would like to run the script helloWorld.py in ADF using Azure Batch:

print('hello world')

To run this script in a pipeline:

-

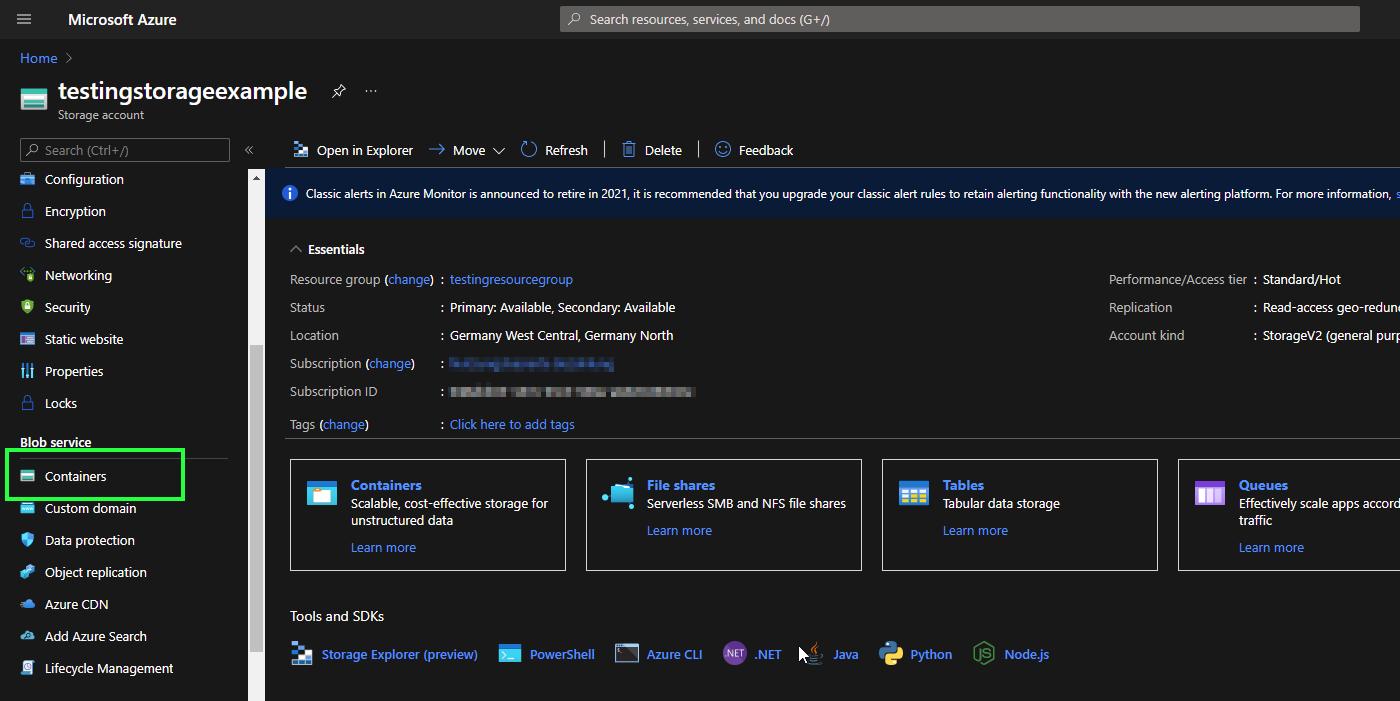

From Azure Batch, go to Blob service > Containers

-

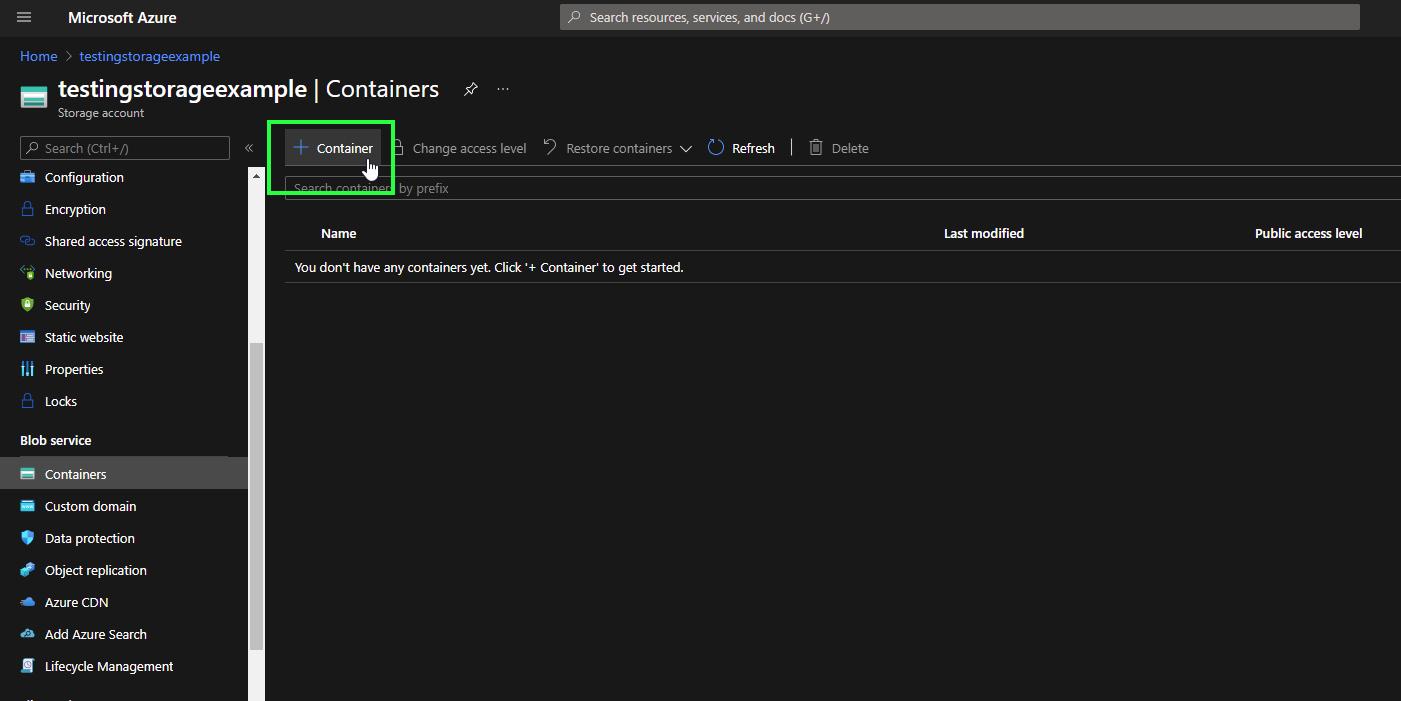

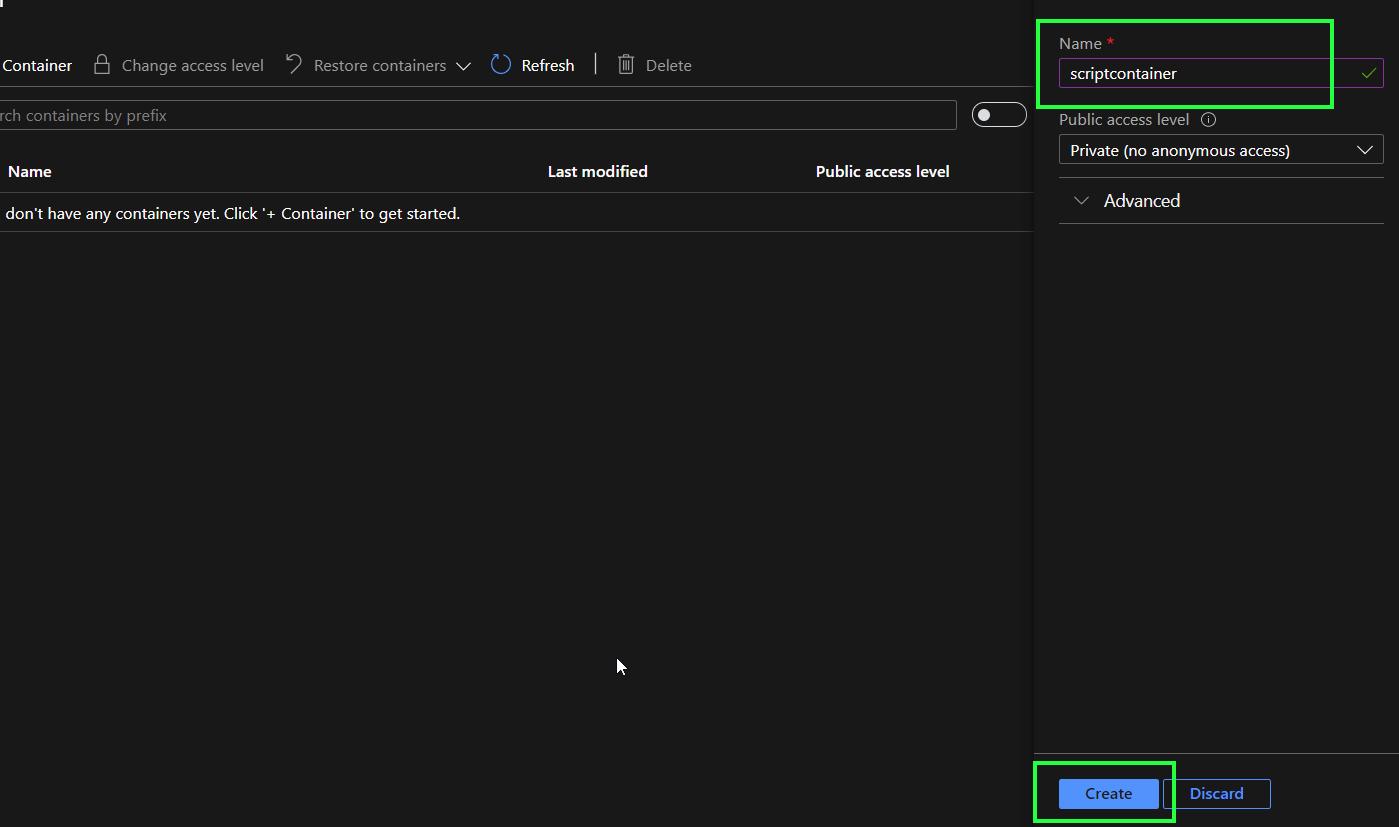

Click on + Container

Name the new container for your scripts and click on Create.

-

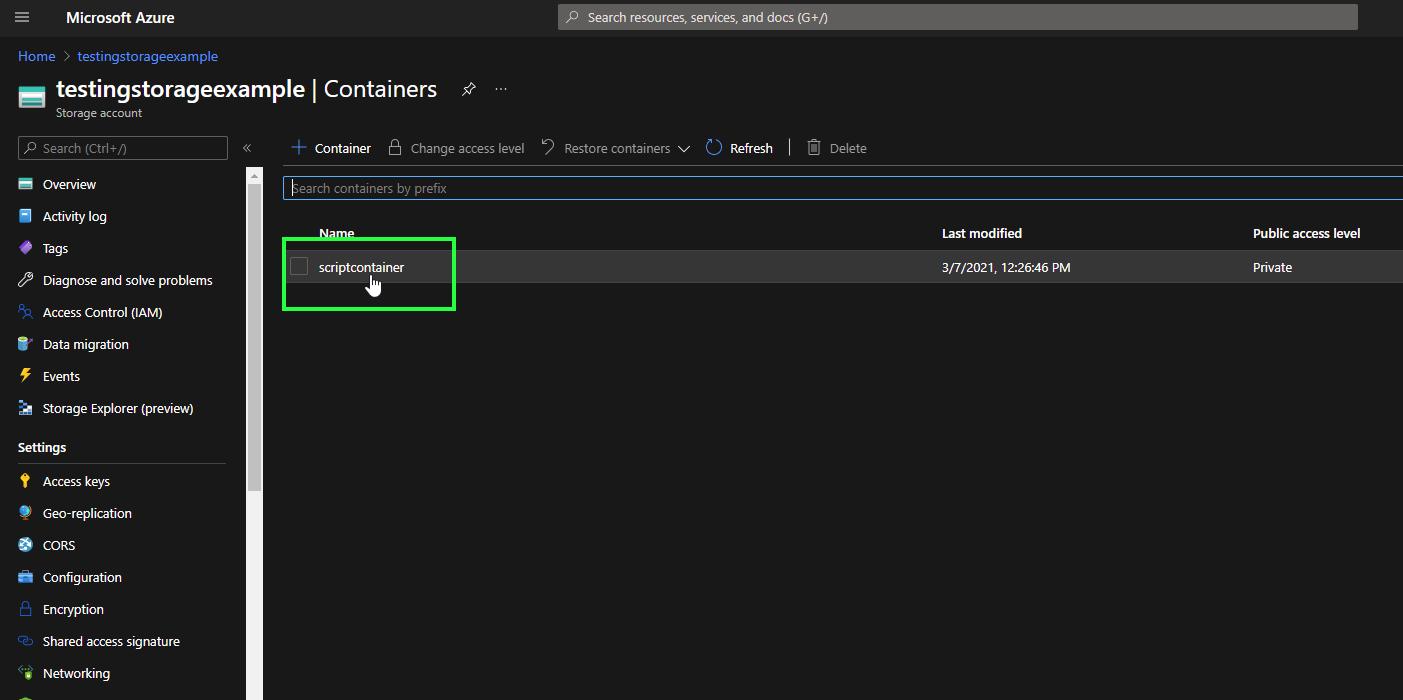

Access the script container

-

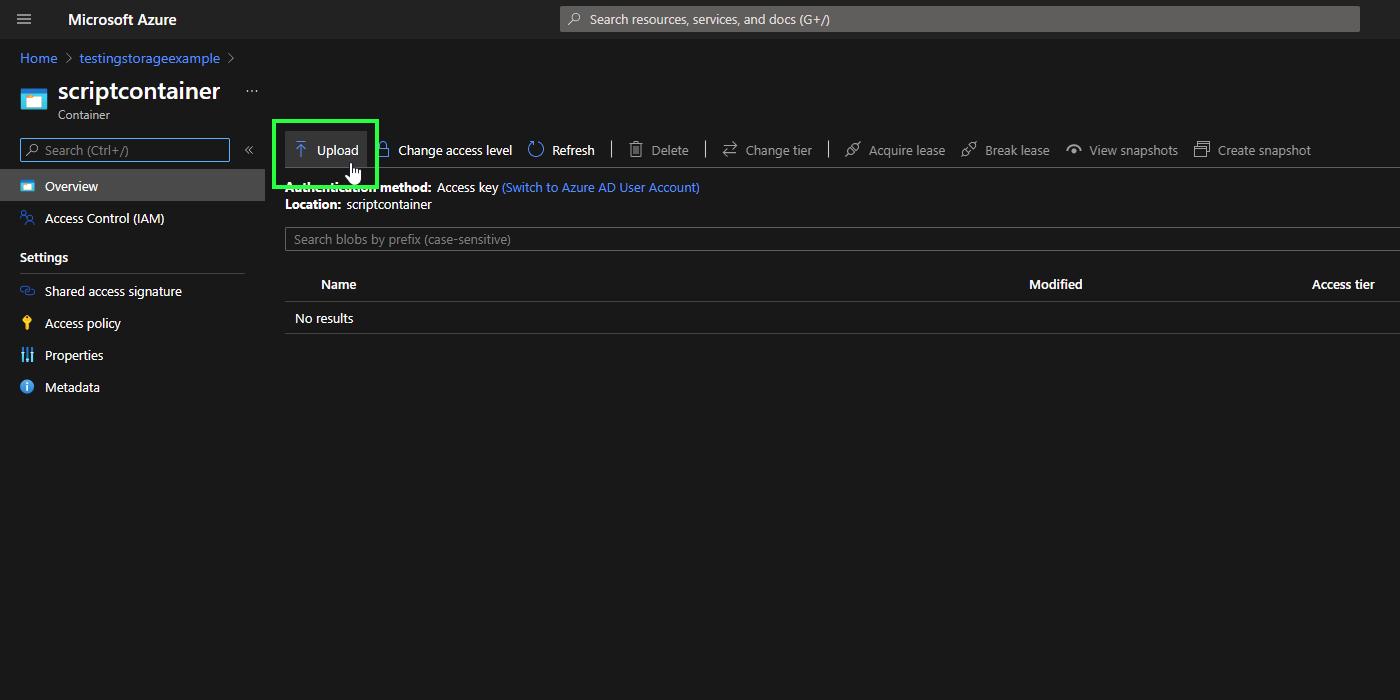

Click on Upload

-

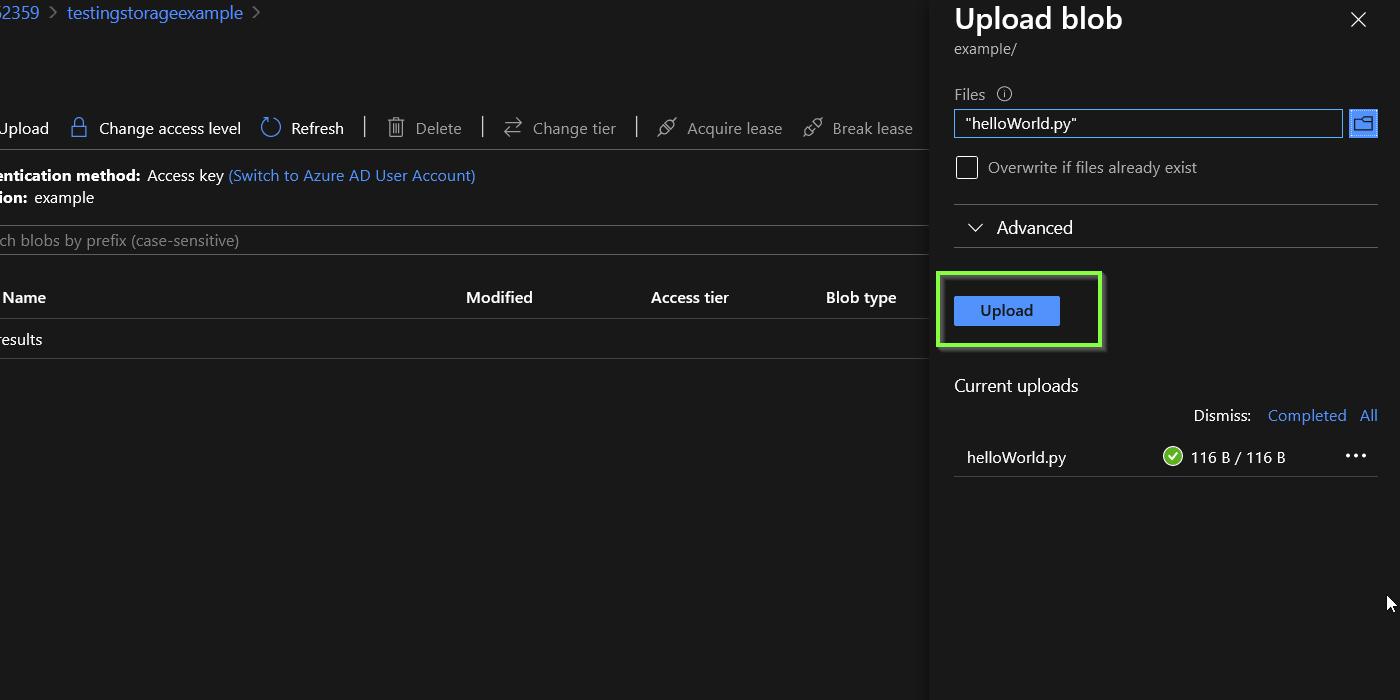

Locate the script helloWorld.py in your local folders and upload
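The container creation and upload steps above can also be scripted. The following is a minimal sketch, assuming the `azure-storage-blob` package is installed and that you have a connection string for the storage account. The function name `upload_script` and the container name `scripts` are illustrative and not part of the original walkthrough.

```python
from pathlib import Path


def upload_script(connection_string: str, container_name: str, script_path: str) -> str:
    """Upload a local script to a blob container and return the blob URL."""
    script = Path(script_path)
    if not script.is_file():
        # Fail early, before any network call is attempted.
        raise FileNotFoundError(script)

    # Imported here so the module still loads where the SDK is not installed.
    from azure.core.exceptions import ResourceExistsError
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)
    try:
        service.create_container(container_name)
    except ResourceExistsError:
        pass  # The container already exists, so reuse it.

    blob = service.get_blob_client(container=container_name, blob=script.name)
    with script.open("rb") as data:
        blob.upload_blob(data, overwrite=True)
    return blob.url


# Example call (assumed names; requires real credentials):
# upload_script("<storage connection string>", "scripts", "helloWorld.py")
```

The portal route shown in the screenshots achieves the same result; the script is only useful if you upload often or want the step under version control.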

-

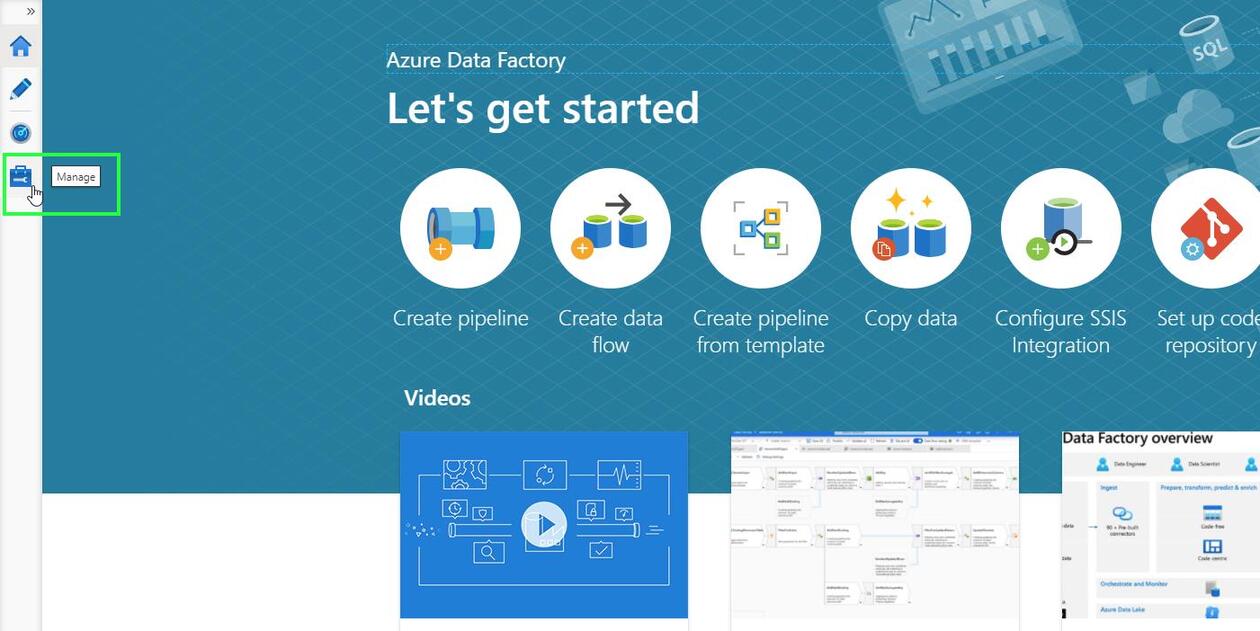

Navigate to the ADF portal. Click on Manage

-

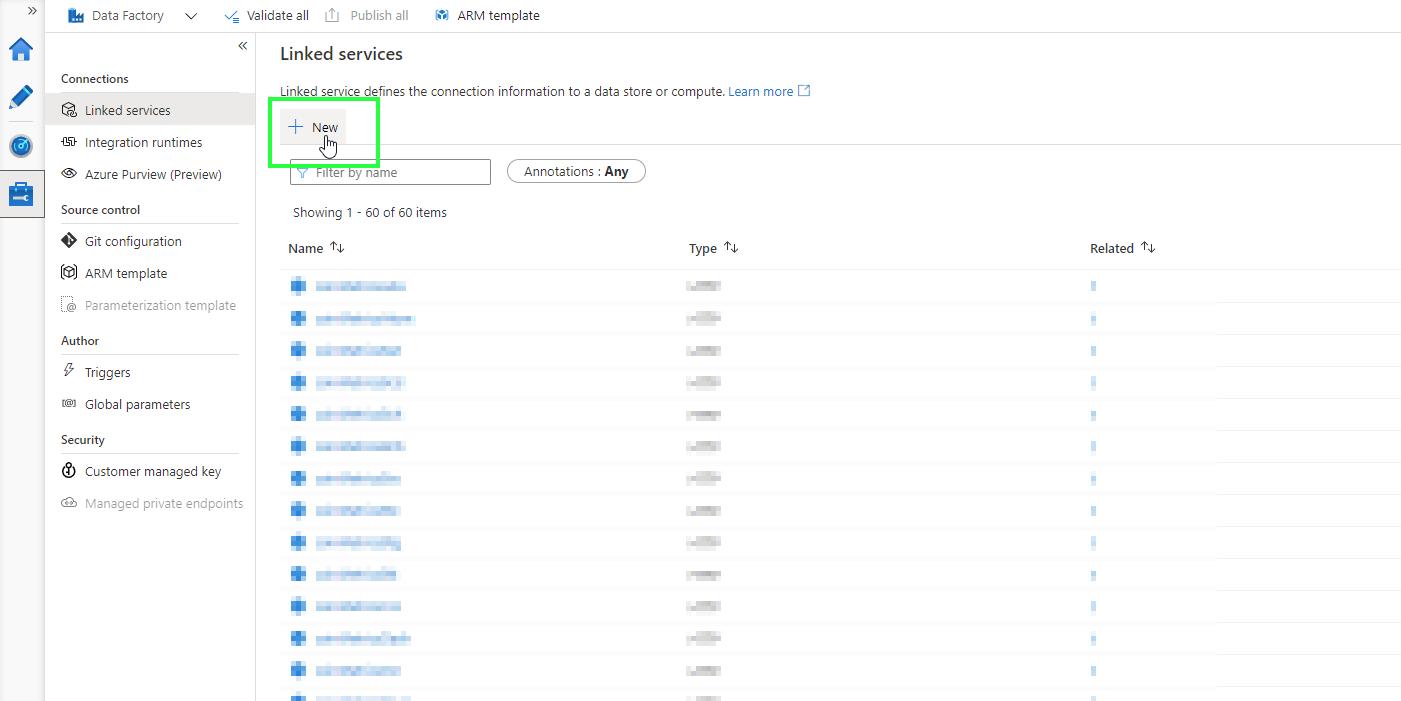

Go to Linked services > + New

-

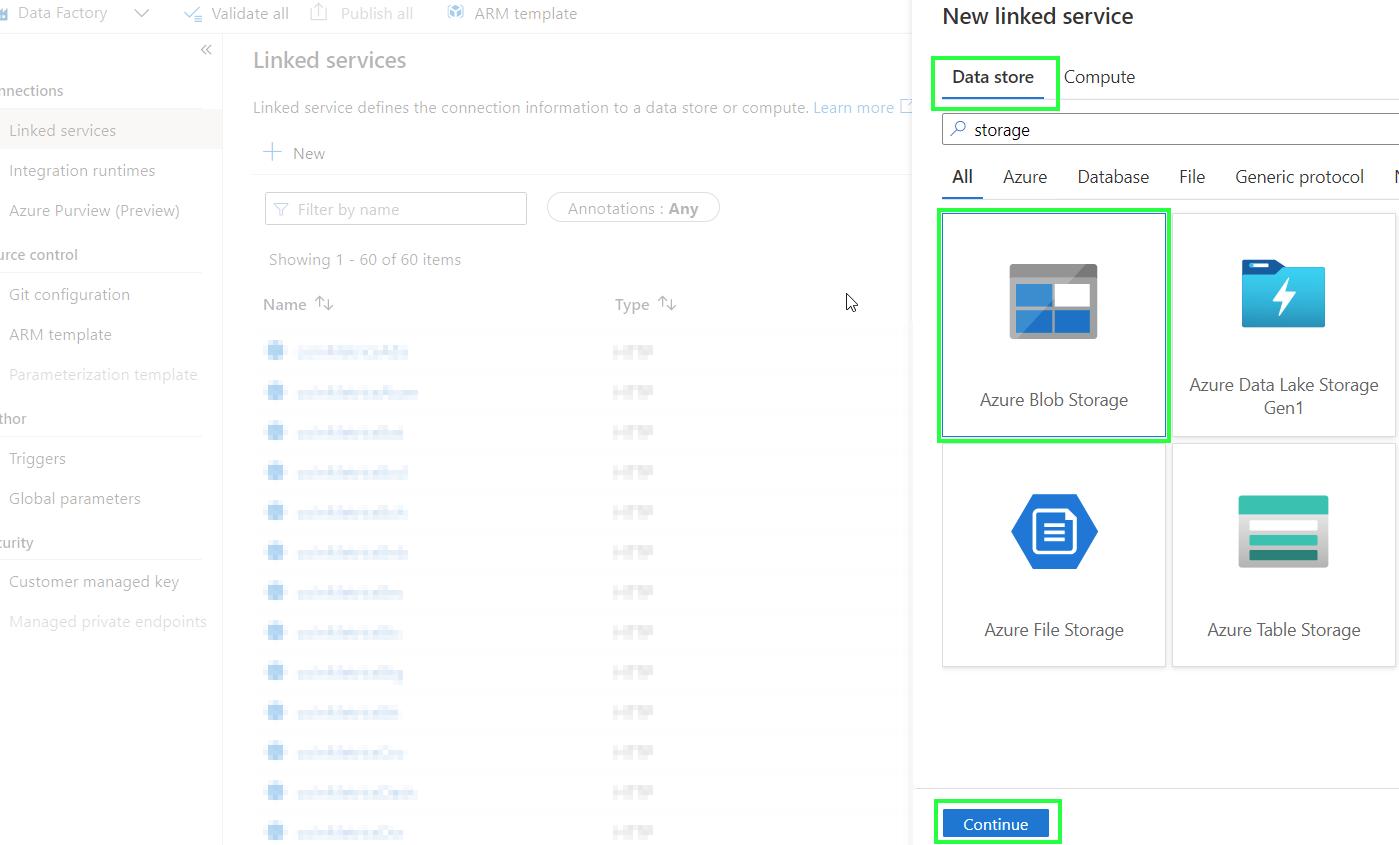

Under Data store, select Azure Blob Storage > Continue

-

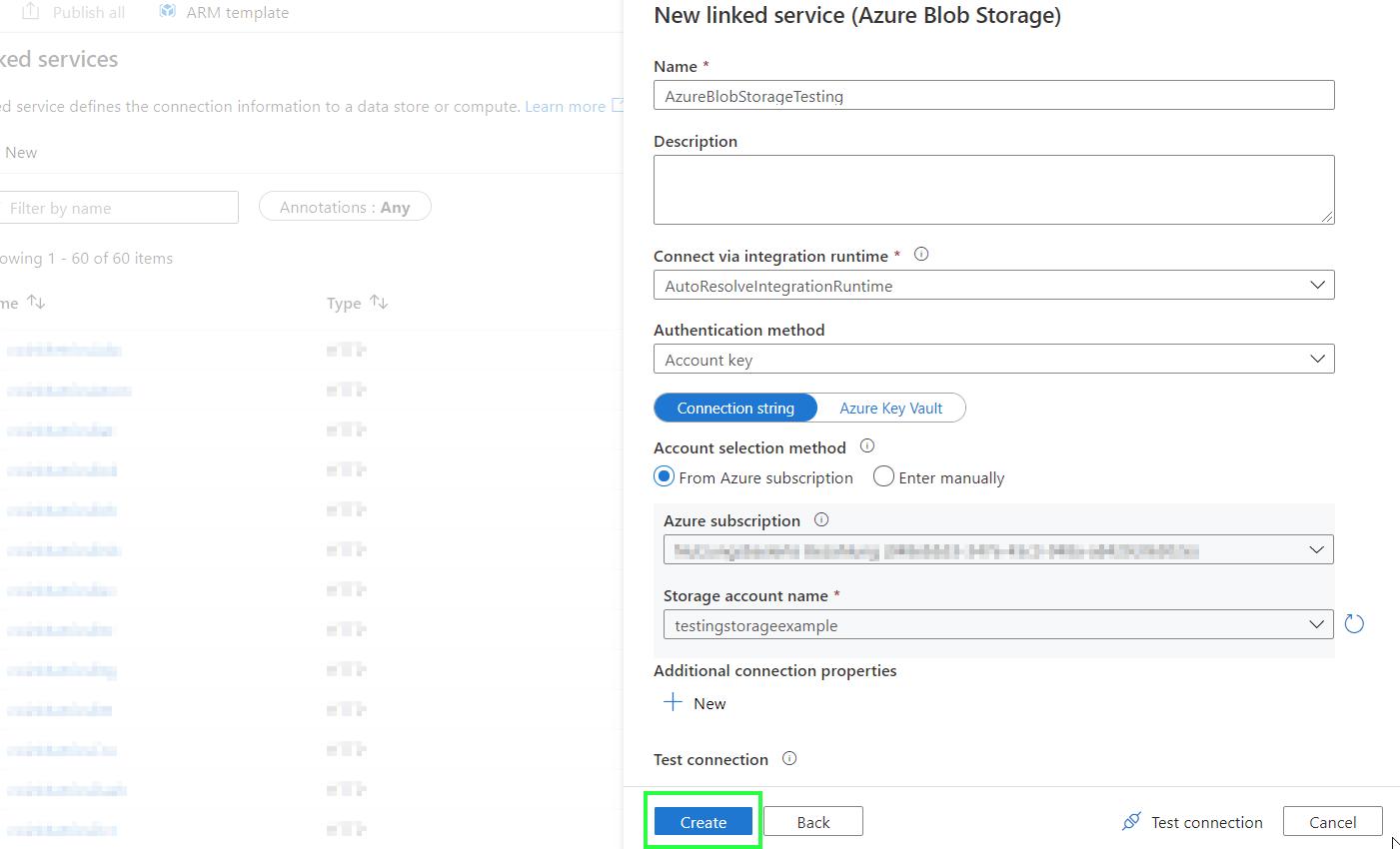

Name the linked service. Select the Azure Subscription under which the storage account was created. From the drop-down menu, select your Storage account name > Create
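ADF stores each linked service as a JSON definition. As a rough sketch of what the storage linked service might look like, the snippet below builds such a definition in Python. It assumes connection-string authentication (the portal may offer other methods), and the name `ScriptStorage` and the placeholder connection string are hypothetical.

```python
import json


def blob_storage_linked_service(name: str, connection_string: str) -> dict:
    """Build an ADF linked service definition for Azure Blob Storage."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {
                # Placeholder only; avoid committing real keys to source control.
                "connectionString": connection_string,
            },
        },
    }


definition = blob_storage_linked_service(
    "ScriptStorage",  # hypothetical linked service name
    "DefaultEndpointsProtocol=https;AccountName=<account>;AccountKey=<key>",
)
print(json.dumps(definition, indent=2))
```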

-

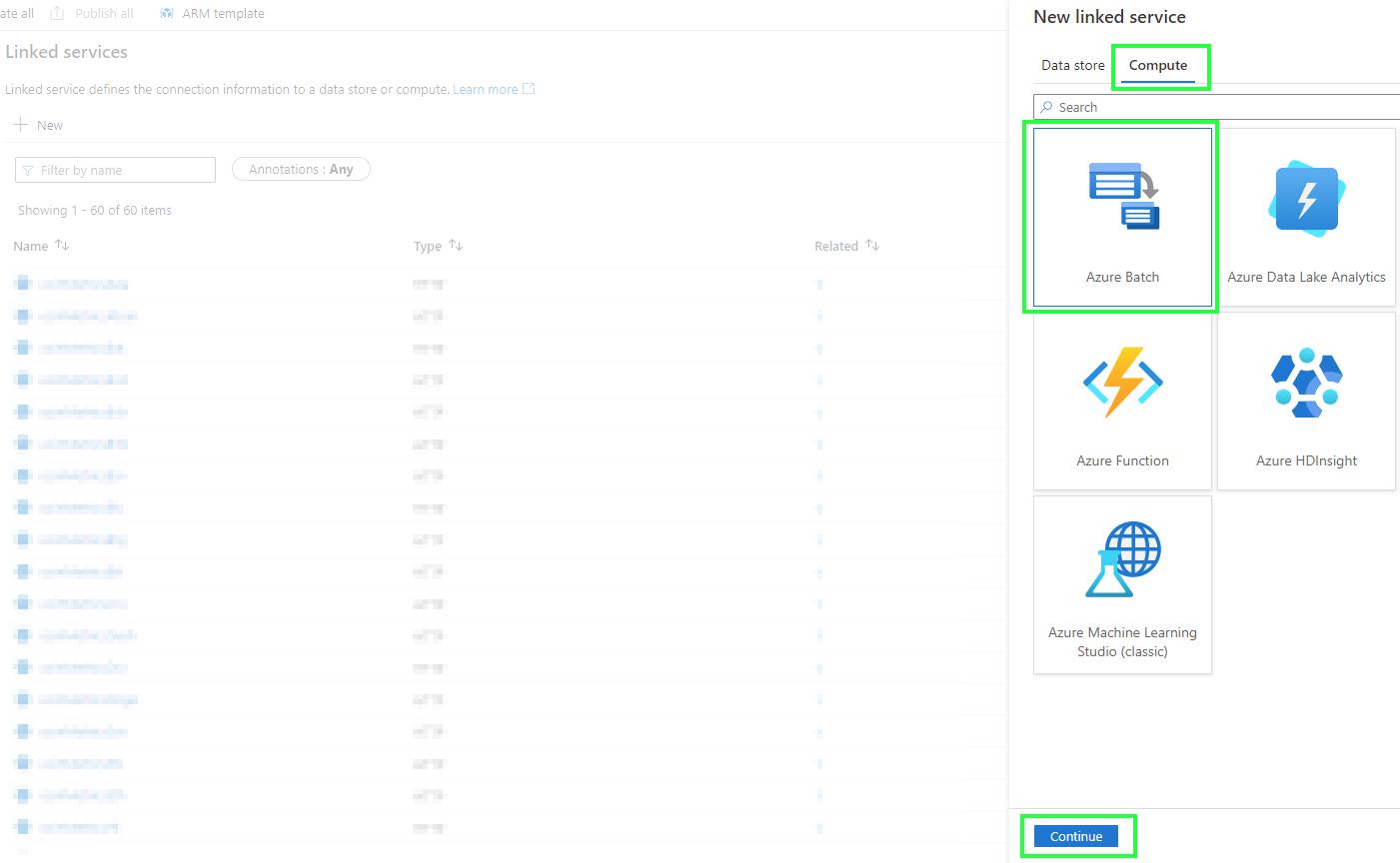

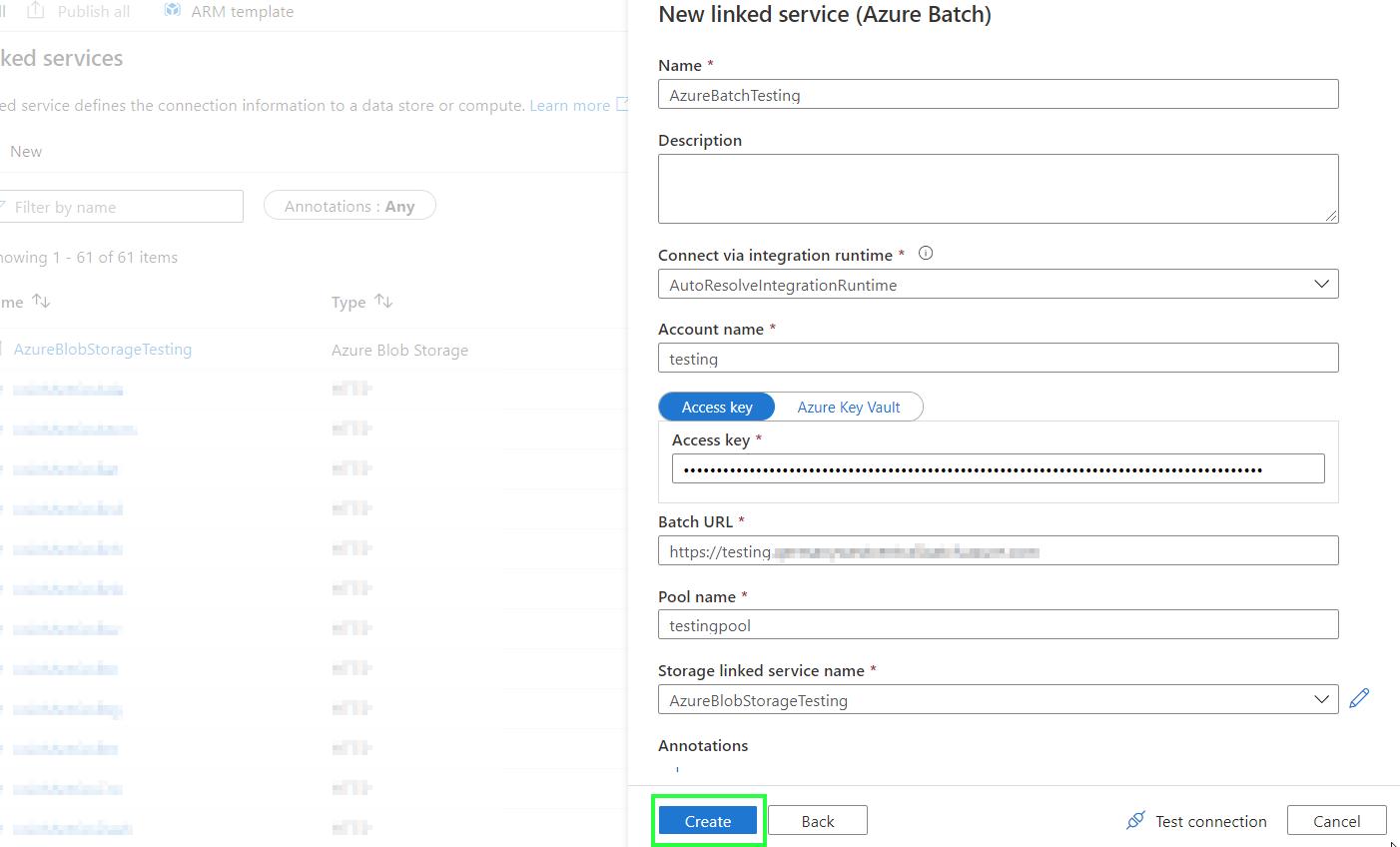

Create a new linked service by going to Linked services > +New. Under Compute services. Select Azure Batch > Continue

-

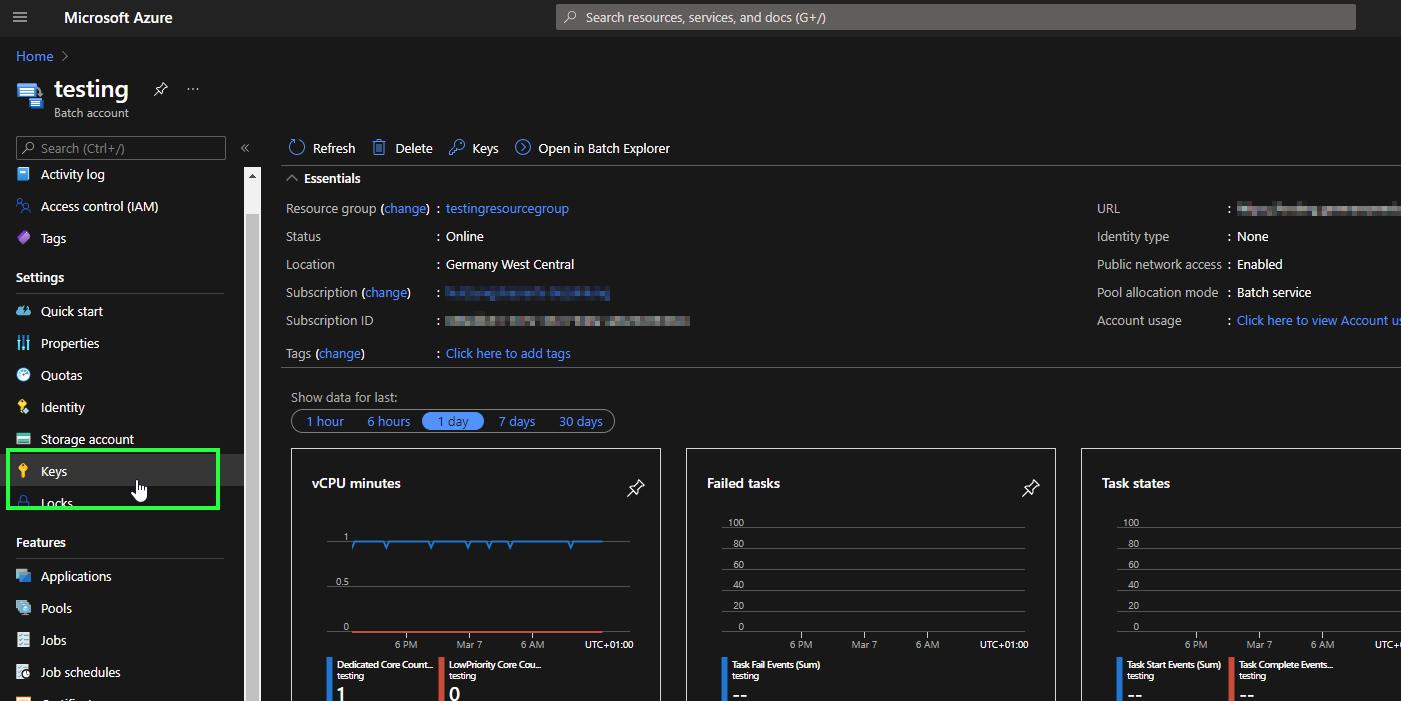

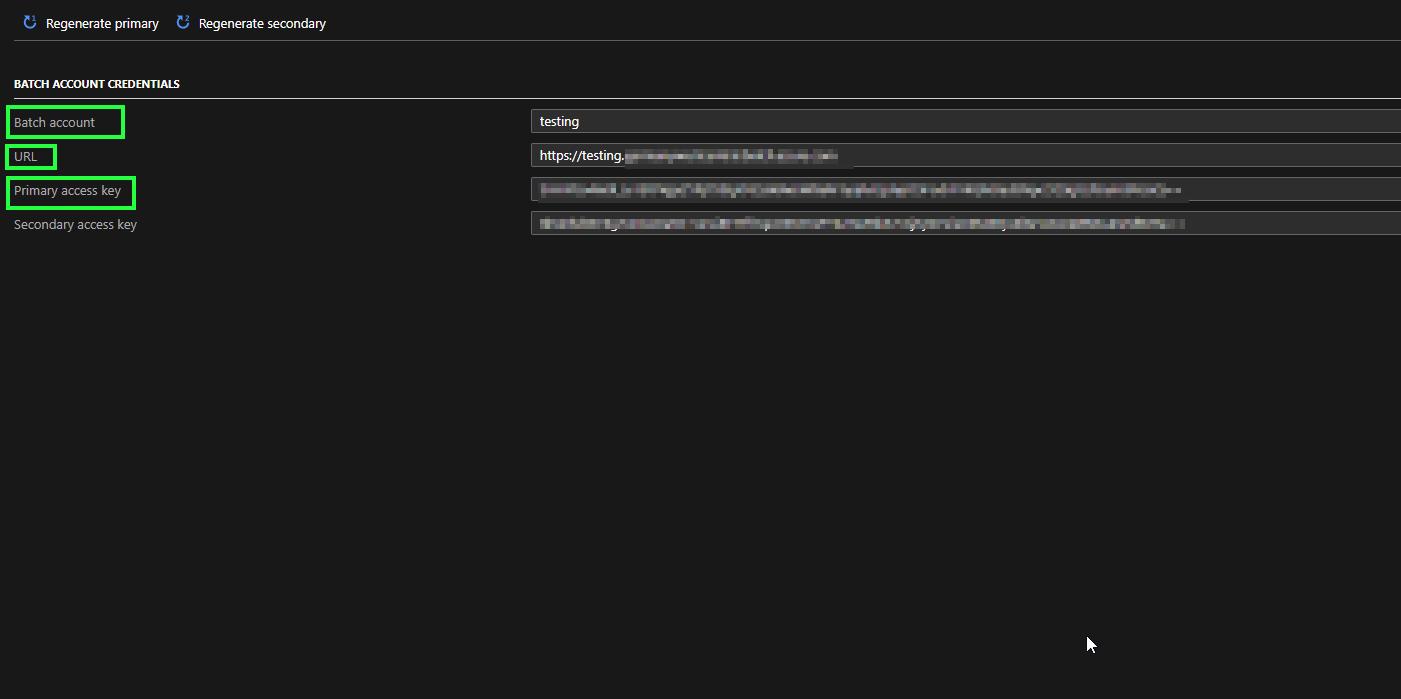

To find the required Batch information, refer to Azure Batch. Go to Settings > Keys

Here you will find three of the required fields: Account Name, Access Key and Batch URL.

-

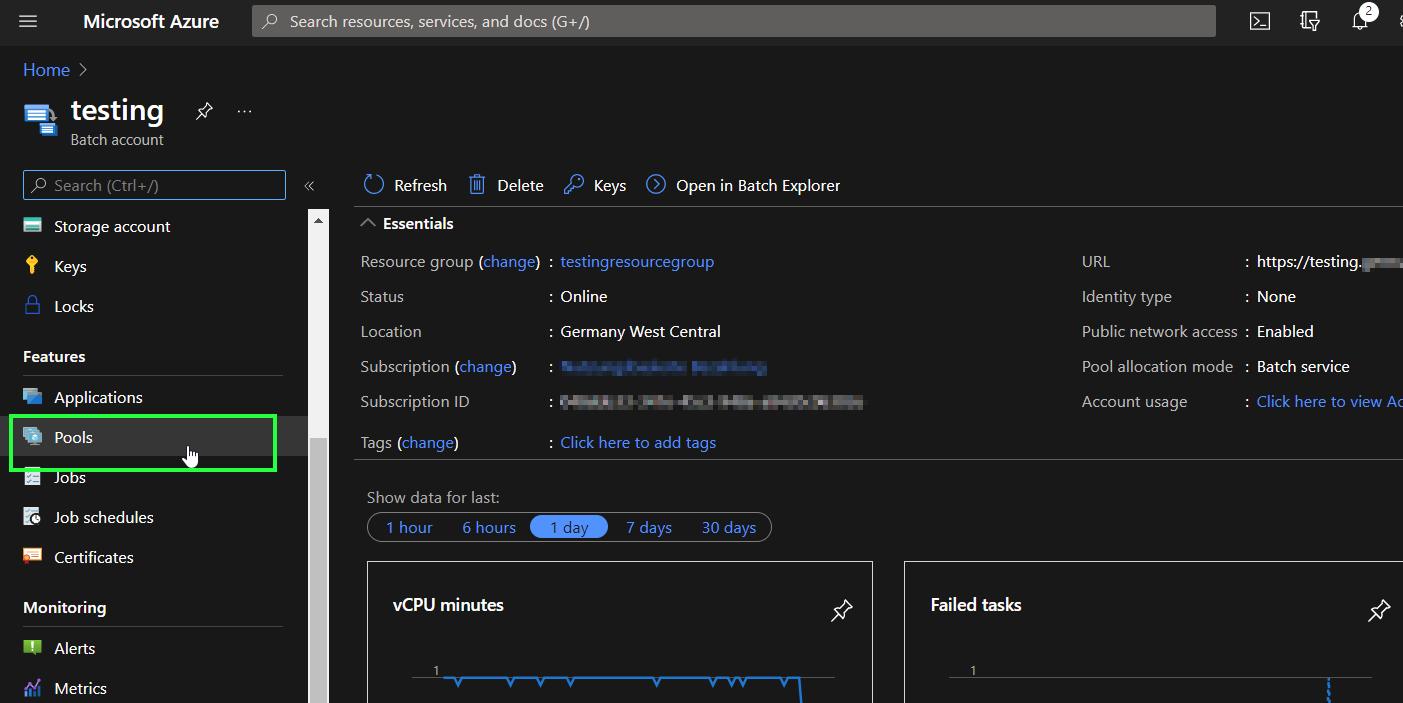

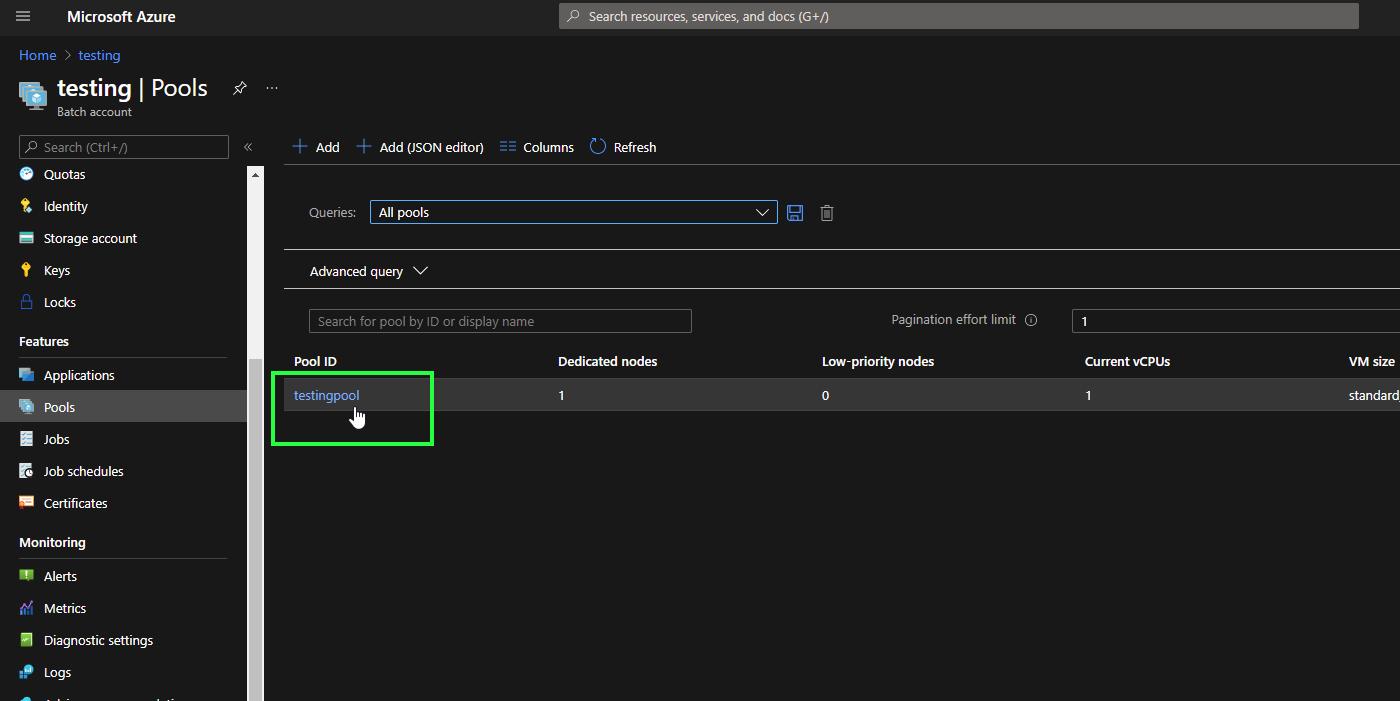

To find the Pool Name, refer to Features > Pools on the left-hand side menu from your main batch account site.

Use the Pool ID shown there as the Pool Name.

Go back to the ADF portal and fill out the required information. Under Storage linked service name, select the linked service for the storage account created above, then click on Create.
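To make the mapping between the Batch portal values and the linked service fields concrete, here is a hedged sketch of the JSON definition behind an Azure Batch linked service, built in Python. The field names follow the Azure Batch linked service schema as I understand it; every value shown (`BatchCompute`, `mybatchaccount`, `mypool`, `ScriptStorage`, the URL) is a hypothetical placeholder.

```python
import json


def batch_linked_service(
    name: str,
    account_name: str,
    access_key: str,
    batch_uri: str,
    pool_name: str,
    storage_linked_service: str,
) -> dict:
    """Build an ADF linked service definition for Azure Batch."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBatch",
            "typeProperties": {
                "accountName": account_name,  # Account Name from Settings > Keys
                "accessKey": {"type": "SecureString", "value": access_key},
                "batchUri": batch_uri,  # Batch URL from Settings > Keys
                "poolName": pool_name,  # Pool ID from Features > Pools
                "linkedServiceName": {
                    # Reference to the storage linked service created earlier
                    "referenceName": storage_linked_service,
                    "type": "LinkedServiceReference",
                },
            },
        },
    }


batch_definition = batch_linked_service(
    name="BatchCompute",
    account_name="mybatchaccount",
    access_key="<access key>",
    batch_uri="https://mybatchaccount.<region>.batch.azure.com",
    pool_name="mypool",
    storage_linked_service="ScriptStorage",
)
print(json.dumps(batch_definition, indent=2))
```

The four values collected from the Batch account map one-to-one onto `accountName`, `accessKey`, `batchUri` and `poolName`, while `linkedServiceName` points at the storage linked service by name.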

You have now created the linked services for Azure Batch and Storage. Part II covers the steps to create and run the pipeline.